What a fantastic weekend! The band came back together, and Dominic and Raven hit it out of the park again with a successful VisionDevCamp at the UCSC Silicon Valley Extension campus in Santa Clara, California. As I wrote two weeks ago, I’ve been attending their developer camps since 2007, and they have never disappointed. As at the first iPadDevCamp in 2010, where a team of us created the Autumn Gem Preview app, I came in with a very clear vision (pun intended) of what I wanted to make. MixEffect Vision is a native app for Apple Vision Pro that creates a virtual ATEM switcher to control Blackmagic Design live production switchers.

Before I started writing anything for the hackathon, I first needed to decide whether to adapt my existing MixEffect code or copy and paste chunks of it into a brand-new project. I had attended an Apple Vision Pro developer lab months ago, well before the general release, and left with little to show for it because I couldn’t build against the native Apple Vision Pro target in Xcode. Because of that experience, my first inclination was to start a brand-new project. Several hours in, I realized that doing so would require too much scaffolding (drudgery just to get something simple working), so I turned my attention back to retrofitting the original project. By mid-afternoon on Saturday, I had finally figured out what was causing the build problem (1), which made it clear that pivoting back to the original codebase was the right call.

This is common in software development as a whole. You head down one road (a new project) thinking it will make things easier, only to realize a third of the way in that adapting an existing codebase (the old project) might actually be easier and faster. With over three years of code in MixEffect, I wouldn’t have to rewrite the plumbing if I could just figure out how to change the code path so that the app did something different when running on Apple Vision Pro instead of an iPad, iPhone, or Mac.
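One common way to make a single Swift codebase take a different path on Apple Vision Pro than on iPad, iPhone, or Mac is the `#if os(visionOS)` compilation condition, which is evaluated at build time for each target. The sketch below is illustrative only and is not taken from MixEffect’s codebase; the `SwitcherPresentation` enum and `currentPresentation()` function are hypothetical names.

```swift
// Hypothetical enum naming the two UI paths; not from MixEffect itself.
enum SwitcherPresentation {
    case volumetric  // 3D virtual switcher on Apple Vision Pro
    case flat        // existing 2D interface on iPad, iPhone, or Mac
}

// Decided at compile time: os(visionOS) is true only when building
// for the visionOS target, so each platform gets its own branch.
func currentPresentation() -> SwitcherPresentation {
    #if os(visionOS)
    return .volumetric
    #else
    return .flat
    #endif
}

print(currentPresentation())  // prints "flat" on any non-visionOS build
```

Because the check is resolved by the compiler, the branch that does not apply is never built for that target, which keeps shared plumbing (networking, switcher state) in one place while only the presentation layer diverges.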

Next, big thanks to John Haney, Ed Arenberg, Lance LeMond, and James Ashley for helping me understand how RealityKit and Reality Composer Pro work with Swift and SwiftUI. Once I had my X, Y, and Z bearings, it was relatively straightforward to connect the 3D model to SwiftUI and make it respond when the user gazed at a button or pinched their index finger and thumb together.

The virtual ATEM has several features that a physical device like the ATEM Mini Extreme ISO lacks. For instance, there are two DSK On Air toggle buttons. The original impetus for developing MixEffect was that I wanted a physical DSK On Air button on the ATEM Mini Pro. The Mini Extreme ISO has just one DSK button, even though the hardware supports two. MixEffect Vision also has a T-Bar lever, which exists on neither the Mini Extreme nor the 2 M/E Constellation HD switcher. Admittedly, it’s a flat SwiftUI view overlaid on a 3D model, but only because I didn’t have time to create a three-dimensional T-Bar. Finally, there are four dedicated buttons that execute various SuperSource presets from MixEffect.

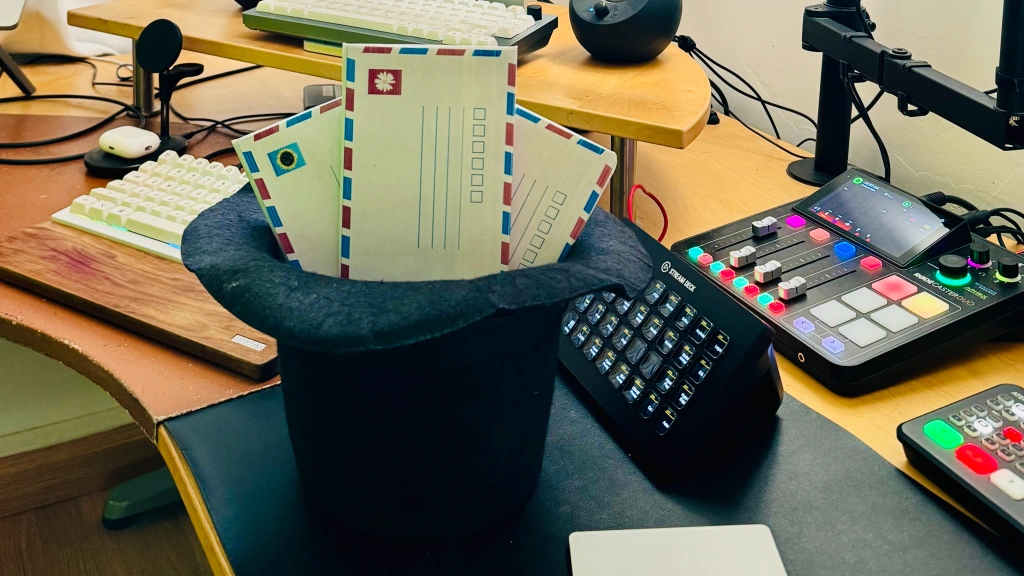

I was able to finish the project more or less by Saturday night, and I spent Sunday morning adding a few buttons and flourishes for the video you see above. One thing the video shows is a virtual monitor above the switcher. I did this by feeding the simulator window into my ATEM 2 M/E Constellation HD as an input. I piped that video feed into an upstream keyer (USK) that I could enlarge to crop out the window chrome. Next, I created another USK set to the program output of the ATEM’s second Mix Effects bus. In the real app, the video feed would come into MixEffect Vision over the network via NDI, VDO.Ninja, or a companion Mac app. Since Apple Vision Pro lacks any kind of hardware input aside from Wi-Fi (and the $300 developer strap), I can’t just plug an HDMI or SDI cable into it!

All that hard but fun work paid off! MixEffect Vision won the Most Creative award at the VisionDevCamp hackathon, and I received an orange HomePod mini as the prize. Believe it or not, I have never owned any of the HomePod smart speakers from Apple, so I’m very happy to have received this!

MixEffect Vision wasn’t the only thing that I was working on this weekend. On Sunday morning, after several days of back and forth with App Review, my next app was approved. If you are a heavy user of Apple Photos on the Mac, with lots of albums and folders, you’ll want to keep your eyes peeled here for an announcement later this week.

Conference season has officially kicked off; next up is Deep Dish Swift in May, followed by WWDC in June.

1. Foundation includes `NotificationCenter`, so I didn’t need to have `import NotificationCenter` in my project files.
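To illustrate the footnote: the `NotificationCenter` class used for posting and observing in-app notifications ships with Foundation, so code like the following compiles with only `import Foundation`. This is a minimal sketch; the `switcherDidConnect` notification name and the `Recorder` type are hypothetical, for illustration only.

```swift
import Foundation

// Hypothetical notification name, used only for this illustration.
extension Notification.Name {
    static let switcherDidConnect = Notification.Name("SwitcherDidConnect")
}

// Hypothetical reference type that records which notifications arrived.
// Marked @unchecked Sendable because the observer block may be @Sendable;
// in this sketch it is only touched from the posting thread.
final class Recorder: @unchecked Sendable {
    var names: [String] = []
}

let recorder = Recorder()

// NotificationCenter comes from Foundation; no other framework import needed.
let token = NotificationCenter.default.addObserver(
    forName: .switcherDidConnect,
    object: nil,
    queue: nil  // nil: the block runs synchronously on the posting thread
) { notification in
    recorder.names.append(notification.name.rawValue)
}

NotificationCenter.default.post(name: .switcherDidConnect, object: nil)
NotificationCenter.default.removeObserver(token)

print(recorder.names)  // ["SwitcherDidConnect"]
```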
